DeepSeek trained a state-of-the-art AI model for about $6 million in compute, thanks to FP8 precision, smart caching, and a lean training setup. The result challenges the idea that AI must cost hundreds of millions and exposes inefficiencies at big-name labs like OpenAI and Anthropic. This article explains how they did it, why it matters, and what it means for the future of AI development.

The $6 Million Shockwave

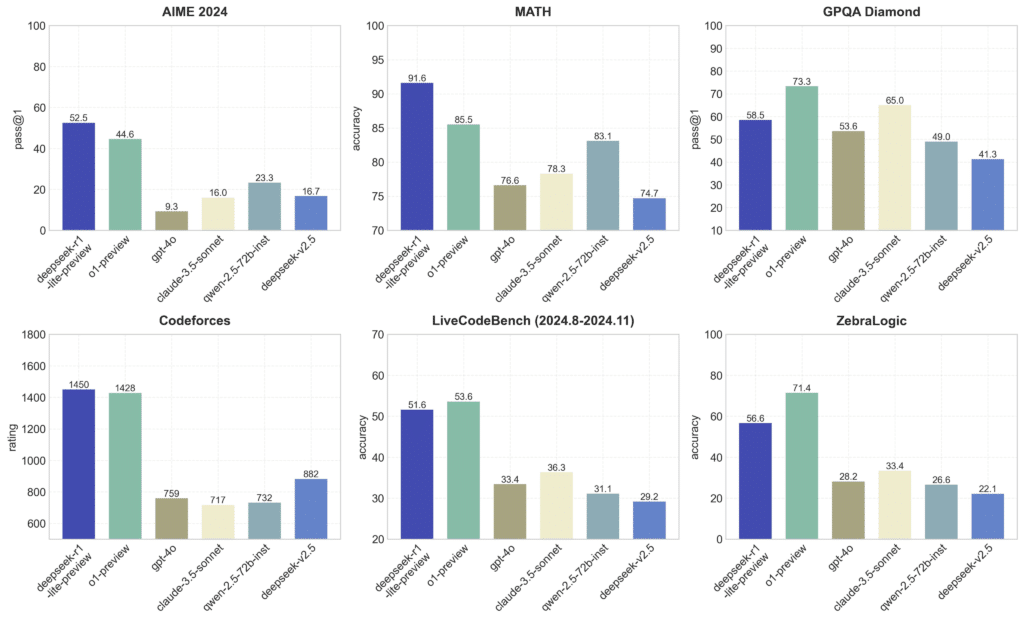

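When DeepSeek reported that training the model behind R1 cost around $6 million, the AI industry took notice. The figure comes from the DeepSeek-V3 technical report (V3 is the base model R1 is built on). It works out to about 2.788 million H800 GPU hours at an assumed rental price of $2 per GPU hour, or roughly $5.6 million. It covers only the final training run, not prior research or ablation experiments. Comparable models from OpenAI or Google DeepMind are rumored to cost hundreds of millions.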

But DeepSeek isn’t relying on magic; it’s playing smart. Its ability to keep costs low comes down to efficient engineering, optimized hardware usage, and a lean operational model. DeepSeek rethought much of the AI training process, showing that big money isn’t the only way to build powerful models.
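The headline number is straightforward arithmetic. The sketch below reproduces it from the GPU-hour breakdown in the DeepSeek-V3 technical report. The $2 rental price is the report’s own assumption rather than an actual bill. The wall-clock estimate is an idealization that assumes every GPU in the 2,048-GPU cluster runs continuously.

```python
# Reported H800 GPU hours for DeepSeek-V3's official training run (DeepSeek-V3 report).
GPU_HOURS = {
    "pre-training": 2_664_000,
    "context extension": 119_000,
    "post-training": 5_000,
}
PRICE_PER_GPU_HOUR = 2.00  # USD; the report's assumed rental price, not an actual bill
CLUSTER_GPUS = 2_048       # size of the H800 cluster described in the report

total_hours = sum(GPU_HOURS.values())
total_cost = total_hours * PRICE_PER_GPU_HOUR
# Wall-clock estimate assuming every GPU is busy around the clock (an idealization).
wall_clock_days = total_hours / CLUSTER_GPUS / 24

print(f"{total_hours:,} GPU hours x ${PRICE_PER_GPU_HOUR:.2f} = ${total_cost / 1e6:.3f}M")
# 2,788,000 GPU hours x $2.00 = $5.576M
print(f"~{wall_clock_days:.0f} days on {CLUSTER_GPUS:,} GPUs")
# ~57 days on 2,048 GPUs
```

The calculation covers GPU time only. It does not include staff, earlier experiments, or the cost of owning the hardware.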

How DeepSeek Slashed Training Costs

Smaller Overhead, Smarter Spending

Big AI labs come with big expenses: massive teams, research grants, and endless compute bills. DeepSeek, by contrast, runs a lean operation and concentrates its spending on compute rather than on maintaining a giant research organization. Keep in mind that the ~$6 million figure covers GPU time for the final training run, not salaries or earlier experiments.

FP8 Precision: Faster and Cheaper

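Most large models are trained in mixed precision. BF16 or FP16 handles most of the math, while FP32 is kept for master weights and other sensitive steps. DeepSeek pushed this further with FP8, running most of the large matrix multiplications in an 8-bit format while keeping numerically sensitive operations in higher precision. Halving the bits relative to 16-bit formats cuts memory and bandwidth and raises throughput on GPUs with FP8 support. DeepSeek reports that its FP8-trained model stayed within about 0.25% relative loss error of a BF16 baseline. It’s a more efficient way to do the same work: less memory, lower costs, and nearly the same accuracy.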
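FP8 only works because values are rescaled before they are rounded. The sketch below simulates FP8 E4M3 in pure Python. E4M3 has 4 exponent bits, 3 mantissa bits, and a largest finite value of 448; the DeepSeek-V3 report says it uses this format for all tensors. Each small block of numbers gets its own scale so that its largest entry maps to 448, and then every value is rounded to the nearest representable E4M3 number. This per-block scaling is loosely modeled on the report’s fine-grained (tile- and block-wise) quantization. The function names are hypothetical, and real training kernels do this on the GPU rather than in Python.

```python
import math
from typing import List, Tuple

E4M3_MAX = 448.0          # largest finite FP8 E4M3 value
E4M3_MANTISSA_BITS = 3    # explicit mantissa bits in E4M3
E4M3_MIN_NORMAL_EXP = -6  # below 2**-6, E4M3 values are subnormal (fixed spacing)


def round_to_e4m3(x: float) -> float:
    """Round x to the nearest E4M3 value (ties to even), saturating at +/-448."""
    if x == 0.0:
        return 0.0
    sign = -1.0 if x < 0 else 1.0
    a = abs(x)
    if a >= E4M3_MAX:
        return sign * E4M3_MAX
    exp = max(math.floor(math.log2(a)), E4M3_MIN_NORMAL_EXP)
    step = 2.0 ** (exp - E4M3_MANTISSA_BITS)  # gap between neighboring E4M3 values here
    return sign * min(round(a / step) * step, E4M3_MAX)


def quantize_block(block: List[float]) -> Tuple[List[float], float]:
    """Give the block its own scale so its largest magnitude maps to E4M3_MAX, then round."""
    amax = max(abs(v) for v in block)
    scale = amax / E4M3_MAX if amax > 0 else 1.0
    return [round_to_e4m3(v / scale) for v in block], scale


def dequantize_block(q: List[float], scale: float) -> List[float]:
    """Undo the scaling to recover approximate original values."""
    return [v * scale for v in q]


weights = [0.0123, -0.5, 0.731, 0.02, -0.0999, 0.25]
q, scale = quantize_block(weights)
restored = dequantize_block(q, scale)
for w, r in zip(weights, restored):
    print(f"{w:+.4f} -> {r:+.4f}  (relative error {abs(r - w) / abs(w):.2%})")
```

With a shared scale per block, a single outlier only affects its own block rather than the whole tensor. That is the motivation for scaling at a fine granularity.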

Multi-Head Latent Attention (MLA): More With Less

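DeepSeek’s use of Multi-Head Latent Attention (MLA) is another game-changer. In standard multi-head attention, the model stores full key and value vectors for every head and every previous token, and this key-value (KV) cache grows large with long contexts. MLA instead compresses keys and values jointly into a small latent vector and reconstructs the per-head keys and values from it when they are needed. Storing the latent instead of the full vectors shrinks the KV cache dramatically, which cuts memory use and speeds up inference.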
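The memory effect can be estimated with simple arithmetic. The sketch below compares the per-token KV-cache size of MLA against a hypothetical standard multi-head-attention model with the same number of heads and layers. The configuration values are taken from the DeepSeek-V3 technical report: 61 layers, 128 heads of dimension 128, a 512-dimensional KV latent, and a 64-dimensional decoupled RoPE key. The 2-byte (BF16) cache entry, the MHA baseline, and the 32K-token context are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class AttentionConfig:
    n_layers: int
    n_heads: int
    head_dim: int
    kv_latent_dim: int        # d_c: size of MLA's compressed key-value latent
    rope_key_dim: int         # decoupled RoPE key, shared across heads in MLA
    bytes_per_value: int = 2  # assumption: cache stored in BF16


def mha_cache_bytes_per_token(cfg: AttentionConfig) -> int:
    # Standard multi-head attention caches a full key AND value vector per head, per layer.
    return 2 * cfg.n_heads * cfg.head_dim * cfg.n_layers * cfg.bytes_per_value


def mla_cache_bytes_per_token(cfg: AttentionConfig) -> int:
    # MLA caches only the compressed latent plus the small RoPE key, per layer.
    return (cfg.kv_latent_dim + cfg.rope_key_dim) * cfg.n_layers * cfg.bytes_per_value


cfg = AttentionConfig(n_layers=61, n_heads=128, head_dim=128, kv_latent_dim=512, rope_key_dim=64)
mha = mha_cache_bytes_per_token(cfg)
mla = mla_cache_bytes_per_token(cfg)
context = 32_768  # hypothetical context length in tokens

print(f"MHA: {mha:,} bytes/token, {mha * context / 2**30:.1f} GiB at {context:,} tokens")
print(f"MLA: {mla:,} bytes/token, {mla * context / 2**30:.2f} GiB at {context:,} tokens")
print(f"reduction: {mha / mla:.1f}x")  # reduction: 56.9x
```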

Intelligent Caching: No Redundant Computation

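DeepSeek also improves efficiency through caching. The clearest example is the KV cache used during text generation. Keys and values already computed for earlier tokens are stored and reused rather than recomputed at every step, and MLA keeps that cache small. This avoids redundant computation, saving both time and compute power.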
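To see why reuse matters, here is a toy single-head attention layer in pure Python. It uses a plain KV cache rather than MLA’s compressed latent. The class name, weights, and token vectors are hypothetical and arbitrary. Without a cache, generating n tokens re-projects keys and values for the whole prefix at every step, for n(n+1)/2 projections in total. With a cache, each token is projected once, and both paths produce identical outputs.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[Vector]


def matvec(w: Matrix, x: Vector) -> Vector:
    # Plain matrix-vector product: one output entry per row of w.
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def attend(q: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    # Scaled dot-product attention for a single query over all cached positions.
    scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(len(q)) for k in keys]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]  # subtract max for numerical stability
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) / total for i in range(dim)]


class ToyAttention:
    """Hypothetical single-head attention layer that counts key/value projections."""

    def __init__(self, wq: Matrix, wk: Matrix, wv: Matrix):
        self.wq, self.wk, self.wv = wq, wk, wv
        self.kv_projections = 0

    def project_kv(self, x: Vector) -> Tuple[Vector, Vector]:
        self.kv_projections += 1
        return matvec(self.wk, x), matvec(self.wv, x)

    def generate(self, tokens: List[Vector], use_cache: bool) -> List[Vector]:
        outputs: List[Vector] = []
        cache_k: List[Vector] = []
        cache_v: List[Vector] = []
        for t, x in enumerate(tokens):
            if use_cache:
                # Only the newest token is projected; earlier keys/values are reused.
                k, v = self.project_kv(x)
                cache_k.append(k)
                cache_v.append(v)
                keys, values = cache_k, cache_v
            else:
                # Without a cache, every prefix token is re-projected at every step.
                pairs = [self.project_kv(p) for p in tokens[: t + 1]]
                keys = [k for k, _ in pairs]
                values = [v for _, v in pairs]
            outputs.append(attend(matvec(self.wq, x), keys, values))
        return outputs


WQ = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.4], [-0.6, 0.2, 0.9]]
WK = [[0.1, 0.4, -0.3], [-0.5, 0.2, 0.6], [0.7, -0.1, 0.2]]
WV = [[0.9, 0.0, -0.2], [0.1, -0.7, 0.3], [0.4, 0.5, 0.5]]
tokens = [[1.0, 0.0, 0.5], [0.2, -1.0, 0.3], [0.7, 0.4, -0.2], [-0.3, 0.9, 0.1], [0.0, 0.5, 0.5]]

cached, uncached = ToyAttention(WQ, WK, WV), ToyAttention(WQ, WK, WV)
out_cached = cached.generate(tokens, use_cache=True)
out_uncached = uncached.generate(tokens, use_cache=False)
print(cached.kv_projections, uncached.kv_projections)  # 5 15
print(out_cached == out_uncached)  # True
```

The gap grows quadratically with sequence length, which is why a compact cache (as with MLA) matters so much for long contexts.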

Is AI Really That Expensive? The Open-Source Debate

DeepSeek’s low training costs don’t just expose inefficiencies—they spark a bigger debate about how AI research is priced.

On one side, open-source advocates argue that big AI companies inflate costs to justify high licensing fees. If DeepSeek can train a competitive model for $6 million, why are others spending 10x or 20x more?

On the other side, critics question DeepSeek’s sustainability. Are they running at a loss to disrupt the market? Are they backed by hidden funding sources? Some suspect the approach might work in the short term, but they question whether DeepSeek can survive long-term without a clear path to monetization.

Are Western AI Labs Overpricing Their Models?

DeepSeek’s announcement is making people ask tough questions. If they can train a state-of-the-art model for $6 million, why do OpenAI and Anthropic need hundreds of millions?

| Model | Reported training cost | Hardware | Open/Closed |
|---|---|---|---|
| DeepSeek-V3 (R1’s base model, MoE) | ~$5.6M (final training run only) | 2,048 NVIDIA H800 GPUs | Open weights |
| OpenAI GPT-4 | Not disclosed (estimated $100M+) | Not disclosed | Closed |
| Anthropic Claude | Not disclosed | Not disclosed | Closed |

DeepSeek’s cost advantage could be a wake-up call for the industry. If AI training is this much cheaper, the high costs charged by AI giants might not be about necessity, but about business strategy.

How DeepSeek’s Low Costs Could Change AI

DeepSeek isn’t just about making AI cheaper—it’s about making AI accessible. By keeping costs low and open-sourcing their model, they’re setting the stage for:

- Wider AI adoption: More businesses, startups, and researchers can now fine-tune and deploy capable models without billion-dollar budgets.

- Faster innovation: Open-source models let developers worldwide improve and refine AI faster.

- Pressure on AI giants: If companies like OpenAI want to stay competitive, they may have to justify why their AI costs so much more.

Conclusion: The New Reality of AI Costs

DeepSeek’s $6 million training cost isn’t just an impressive technical feat—it’s a disruption. By proving that powerful AI can be trained for a fraction of the expected price, they’ve exposed inefficiencies in how AI is developed and monetized.

Will this force AI giants to rethink their business models? Will open-source AI take over? One thing is clear—DeepSeek just changed the conversation, and the industry will never look at training costs the same way again.

TL;DR

DeepSeek didn’t just build a model—they exposed the AI industry’s bloated pricing.

They trained the model behind R1 for about $6 million in final-run compute, using FP8 precision, MLA, and caching tricks that made it leaner, faster, and cheaper than anything OpenAI or Anthropic has cooked up. This guide breaks down how they pulled it off, why it matters, and what it means for the future of open-source AI. Whether you’re a startup, a developer, or just tired of billion-dollar buzzwords, this is the blueprint for smarter AI training.

Author


Alex started his career creating travel content for Jalan2.com, an Indonesian tourism forum. He later worked as a web search evaluator for Microsoft Bing and Google, where he spent over a decade analyzing search relevance and understanding how algorithms interpret content. After the pandemic disrupted online evaluation work in 2020, he shifted to freelance copywriting and gradually moved into SEO. He currently focuses on content strategy and SEO for finance and trading-related websites.
